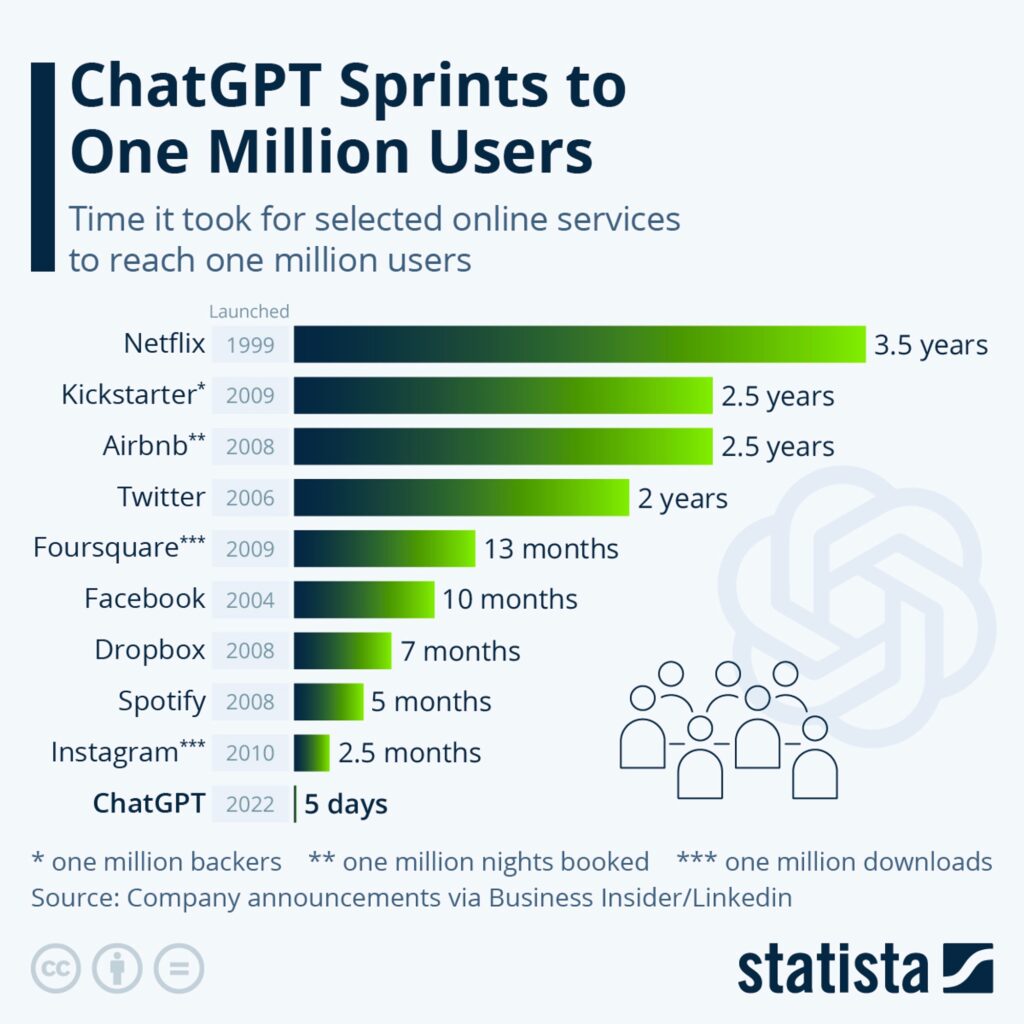

Here is an interesting statistic: the time it took some popular technology applications to reach their first million users.

If you missed Part 1, read the back story first: A post-GPT World.

Almost everyone seems to have hit the proving ground and taken the ChatGPT interface for a ride with all kinds of amusing and esoteric requests. OpenAI itself and some college professors claim that the algorithm has taken various academic qualifying exams and scored in the higher percentiles.

You can also look at it in a different way. An algorithm passing an academic test perhaps says more about the quality of our rote education system, which is devoid of the creativity and original thinking that are often attributed to humans.

When interacting with ChatGPT, it is not surprising that we are awestruck by the response and at times by the sophistication of the language constructs used in the response. It could also be a reflection of the degrading style of human communication that is practiced today in the world of text messages and what is called the Gen Z lingo. It is at times quite refreshing to hear clear and concise answers that make sense.

Let’s try to understand what GPT is and how the algorithm works. An algorithm that receives random questions and responds with even more mysterious answers should not remain a true black box. A little bit of transparency always helps.

What is GPT?

GPT, or Generative Pre-trained Transformer, is a type of large language model (LLM) that is trained on a very large volume of text data, close to 45 TB for GPT-3, spanning multiple domains including various books and other texts and amounting to around 500 billion tokens (words or pieces of words).

Anyone with academic interests who wants a deeper understanding of the algorithm might have to time travel decades back to the research of the famous American mathematician and electrical engineer Claude Shannon, his work on Information Theory and his experiments in automatic text generation. I found a great explanation of Shannon’s work in this article by Cal Newport in The New Yorker.
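To get a feel for that kind of automatic text generation, here is a minimal sketch in Python. It is a toy illustration of the general idea, not Shannon's actual procedure and certainly not how GPT works internally: it records which word follows which in a sample text and then picks each next word at random from those followers. The function names (`build_bigram_table`, `generate`) and the sample sentence are my own assumptions for illustration.

```python
import random
from collections import defaultdict


def build_bigram_table(text):
    """Map each word to the list of words that follow it in the text."""
    words = text.split()
    table = defaultdict(list)
    for current, following in zip(words, words[1:]):
        table[current].append(following)
    return table


def generate(table, start, length, seed=0):
    """Generate up to `length` words, choosing each next word at random
    from the words that followed the current word in the training text."""
    rng = random.Random(seed)  # fixed seed so repeated runs give the same text
    word = start
    output = [word]
    for _ in range(length - 1):
        followers = table.get(word)
        if not followers:
            break  # the current word never had a successor in the text
        word = rng.choice(followers)
        output.append(word)
    return " ".join(output)


# Hypothetical sample text, chosen only for illustration.
sample_text = "the cat sat on the mat and the dog sat on the rug"
table = build_bigram_table(sample_text)
print(generate(table, "the", 8))
```

Even this tiny model produces word sequences that look locally plausible, because every adjacent pair was seen in the sample text. A large language model conditions on far more context and far more data, but the spirit of predicting what comes next is similar.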

Where does all the investment in OpenAI mainly go? Billions of dollars have been spent on clusters of high-performance processors, including thousands of Nvidia GPUs with high-capacity memory and giga-scale networking capability.

Bottom line, everything depends on the data that is used to train the algorithm. Nothing more and nothing less. If the data is biased, so is GPT’s output.
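A small sketch makes the point concrete. It is a toy word counter, not a real language model, and the corpus and the function name `next_word_probabilities` are hypothetical, made up for illustration. The "model" simply estimates which word follows a given word, so whatever skew exists in the training text shows up directly in its output.

```python
from collections import Counter


def next_word_probabilities(corpus, prompt_word):
    """Estimate the probability of each word that follows `prompt_word`,
    using nothing but counts from the training corpus."""
    words = corpus.split()
    followers = Counter(
        following
        for current, following in zip(words, words[1:])
        if current == prompt_word
    )
    total = sum(followers.values())
    if total == 0:
        return {}  # the prompt word never appears with a successor
    return {word: count / total for word, count in followers.items()}


# Hypothetical, deliberately lopsided training text: three positive
# statements for every negative one.
skewed_corpus = "coffee is great . coffee is great . coffee is great . coffee is awful ."

print(next_word_probabilities(skewed_corpus, "is"))
# {'great': 0.75, 'awful': 0.25}
```

The counter has no opinion about coffee. It only mirrors the three-to-one imbalance in the text it was given, which is the same mechanism, at a vastly smaller scale, by which biased training data leads to biased answers.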

It is a different story that all these tests and the resulting feedback were used to train the next version of the language model, allowing OpenAI to release the improved GPT-4 that Microsoft would soon integrate into its Office 365 applications.

Here is my take on the relevance of GPT from three different yet important aspects.

What can it do well?

No question about it. Technology, including AI, and ChatGPT in particular, will improve lives and have a significant impact on the future of work and the way things get done. Even setting aside the zillions of possibilities that the world is presently going gaga over, there are notable opportunities in areas like summarizing a large corpus of text and extracting points of interest from journals, documents and past legal records. In other words, a more sophisticated, AI-powered search.

We have already had Grammarly-like sentence correctors for a while now, and ChatGPT could enhance that writing experience. Whether, and when, the AI algorithm can outdo the writing style of great authors is still a point of contention and intrigue.

As we have been saying, Generative AI models should create a significant uptick in the present-day chatbot experience and act as a blessing in disguise for getting over the BOT Hangover. Instead of producing mechanical responses to the user’s questions, Generative AI models can provide a far superior user experience, with concise and pointed answers drawn from a large corpus of knowledge.

To me personally, other great applications of GPT-4 are Khan Academy’s preview of its learning assistant, Khanmigo, and the popular language learning app Duolingo. These are all great examples of how AI could simplify things and make our lives better.

Taking away human jobs.

It is also an inevitable consequence that ChatGPT will make a few jobs redundant. But, just like any other technology, the AI algorithm would help improve productivity and augment human capabilities.

Though there are use cases of ChatGPT providing a comprehensive treatment plan, the algorithm is far from replacing doctors, especially from the liability and regulatory point of view. It can very well make their jobs easier by automatically generating medical records and notes, or by summarizing medical reports to surface items that need attention.

It is more likely that AI will reduce the amount of human effort required for typical jobs of today than completely replace human jobs. Humans would continue to evolve and adapt to the influence of AI on our lives. This also involves a significant aspect of the human brain, consciousness: our ability to stay aware and relevant in the dynamic world around us. How much consciousness can be instilled in these algorithms is a serious question in itself.

The trust and liability factor.

The last, yet most important, factor is trust and liability. While the world is quite fascinated by the Generative nature of the algorithm and the way results are churned out of thin air, how reliable and trustworthy are these outputs?

Would we be comfortable with the mystery shrouding the internal wiring of the blackbox and how it works?

While we like to read the results we see, would we be comfortable using the results coming out of the black box directly to take actions without much concern for the consequences?

Would the creators of the algorithm, and of the other applications built on top of it, guarantee the results with reasonable levels of confidence? It would be very interesting to read the terms of use and the fine print.

To me personally, the significant element of concern is the data that is used to train the language models, the corpus of words and tokens used to train the algorithm at a significantly large scale. There are already numerous instances of inherent bias and distortion in the results that the algorithm spits out. OpenAI’s website itself is filled with all kinds of disclaimers and terms of use. Feels like being treated like a trapeze artist in a circus.

The world is already going crazy with opinions. We don’t want yet another opinion maker in a hyper-polarized world. Do we?

Bottom line

AI is for real, and algorithm-based technologies will help us get better and do things better. No question about it. A language model with an incredible ability to do pattern matching on extremely large volumes of text, at scale, to understand users’ questions and to respond in a more sensible way than traditional chatbots is here to stay. With GPT, yes, next-generation chatbots are here, along with various other possibilities to analyze text at scale.

Anything beyond that to undermine human intelligence? Hmm. Let’s take it with not just a pinch of salt, but lots of it.